If you want your website to use HTTPS you'll need an SSL certificate. Read on to find out what this all means, starting with a bit of history.

HTTP

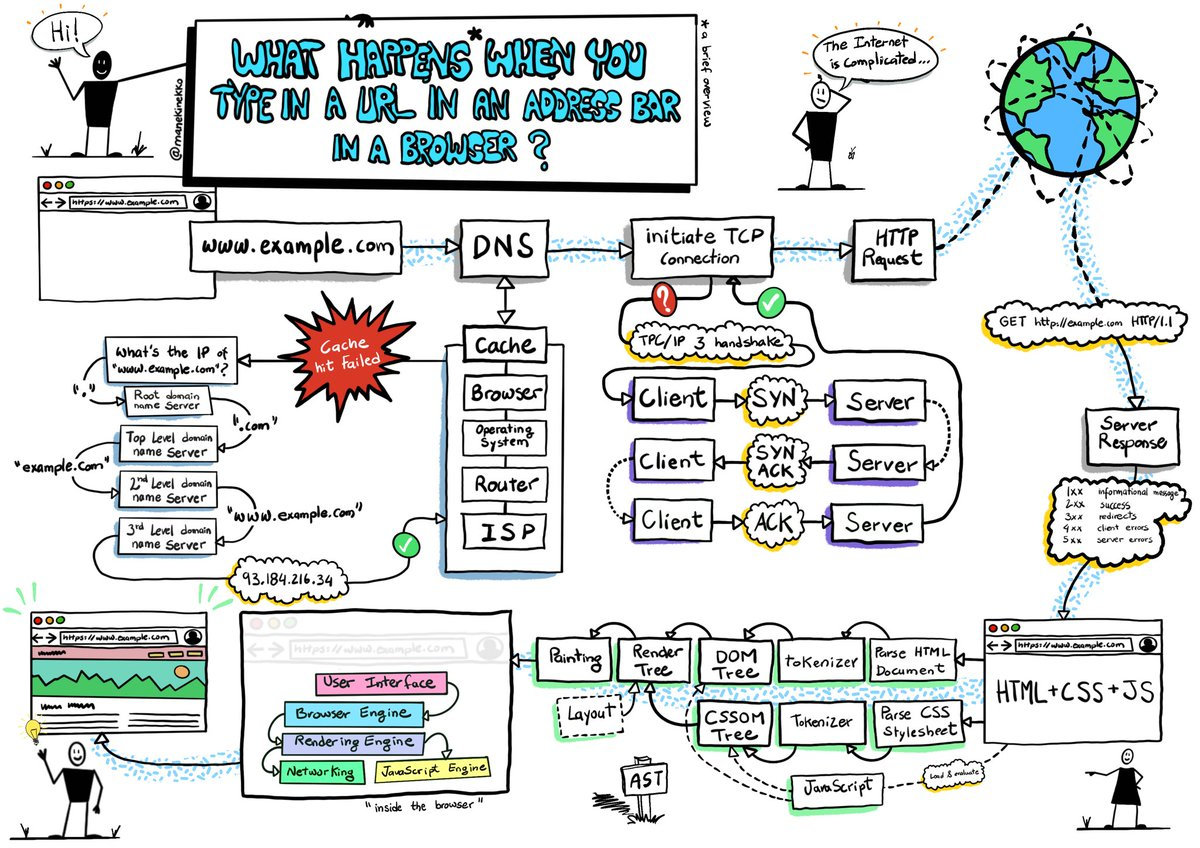

When Tim Berners-Lee launched the first ever website in 1991 it was accessible using a protocol called HTTP (Hypertext Transfer Protocol). The protocol is very simple and describes how a client (a web browser, for instance) can acquire a hypertext document (a web page) from a remote server when it is supplied with a document address like this: http://smallworkshop.co.uk/2022/01/24/ssl-certificates. This protocol is still used today.

Back then web data was delivered over the internet as plain text and could therefore be read by anyone you were sharing the network with.

To understand why this was a problem we just need to look at how the network is organised. As shown in the picture below, there is a lot going on when you communicate with a web server over the internet and data can be passed between dozens of servers before it reaches its destination:

The internet was designed to allow people all over the world to add machines into it without any kind of vetting or approval process. The same openness that has allowed it to scale to billions of machines also means that your data is being passed around on computers and networking devices that may not be safe.

HTTPS

It soon became clear that many of the services people wanted on the web, like e-commerce and banking, could not be done safely over an unsecure connection and by 1994 Netscape had created a new protocol that established an encrypted link between the client and web server. They called the new protocol Secure Sockets Layer (SSL). There were problems with the initial version but improvements were quickly made and the successor to the 3rd version of the protocol, which by then had been renamed TLS[1], was used to extend HTTP so that it could operate over a secure communication channel. Using HTTP in this way became known as HTTP over TLS or HTTPS (Hypertext Transfer Protocol Secure).

If the web were designed from scratch today, encrypted communications would almost certainly be required by default but, back in the 1990s, the cost and inconvenience of implementing HTTPS meant that it was primarily adopted on websites that handled money, like online banks and shopping sites.

The subsequent realisation that it was needed across the entire web has left us in a bit of a mess, but before we get into that let's see how HTTPS works.

To determine if a communication channel is secure, whether analogue or digital, you need to answer three questions:

- is the person (or server) I'm talking to who they claim to be?

- can anyone tamper with the messages being exchanged?

- can anyone eavesdrop on the conversation?

HTTPS aims to answer all three questions - often referred to as authenticity, integrity and confidentiality - as explained below.

What HTTPS does not do

At risk of stating the obvious, knowing you can communicate securely with someone is not the same as knowing you can trust them. Your conversation with Mr Big may well be private, but you still need to be careful what you tell him.

The same logic applies to computers: when you are using a secure connection to a website it just means the data is exchanged in private. It does not automatically follow that you should trust the website to use your data responsibly.

Cryptography

Before the term "crypto" was hijacked by ne'er-do-wells flogging cryptocurrencies and dodgy NFTs it simply meant "cryptography".

We encrypt data to protect its confidentiality: only the person with a secret key can unlock the encryption and read the original text.

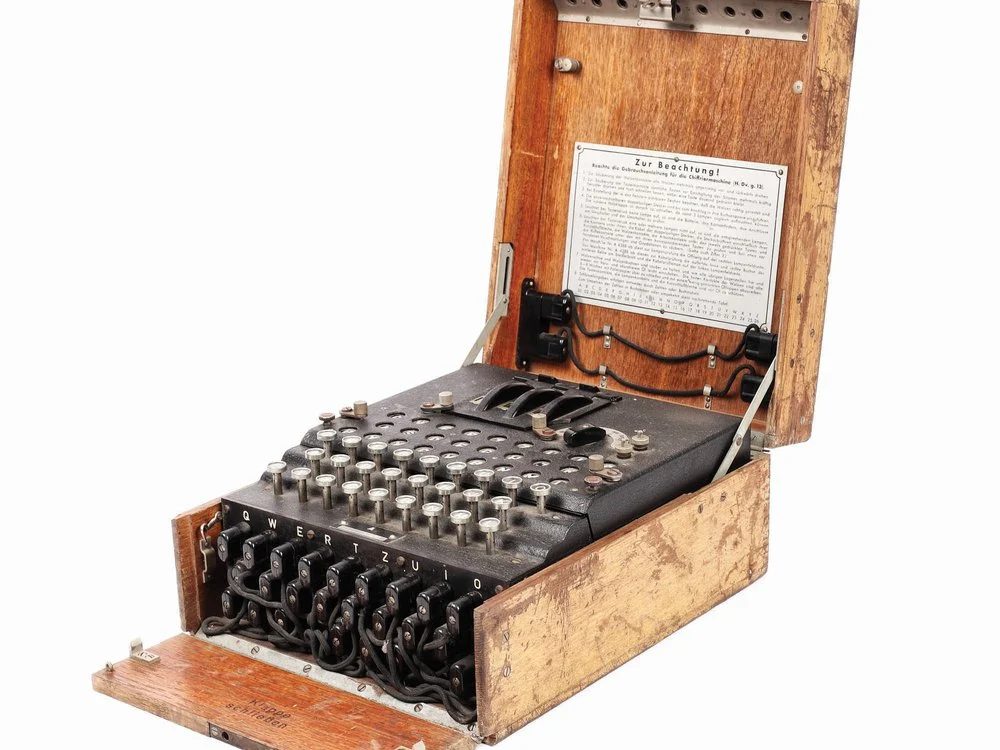

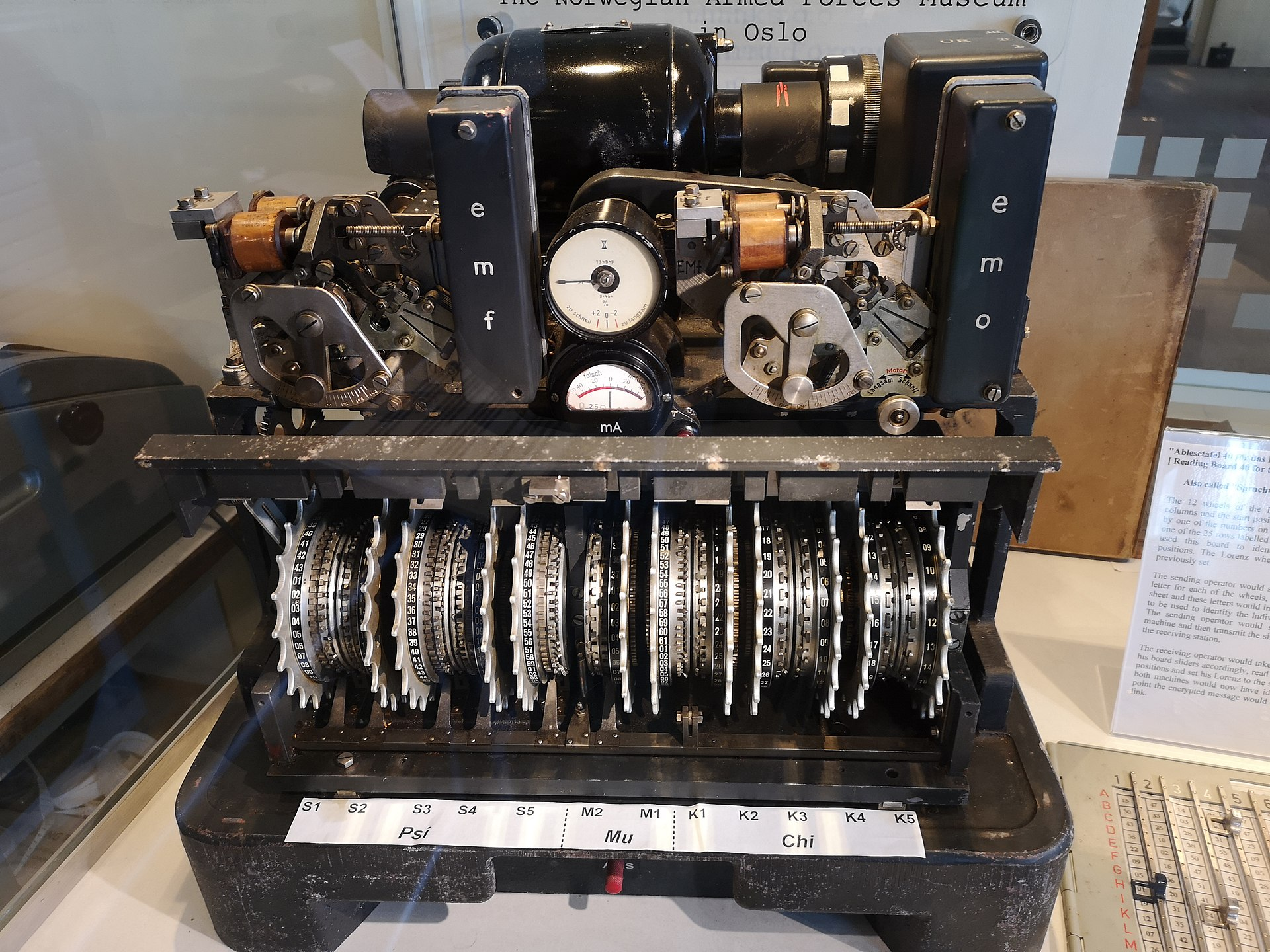

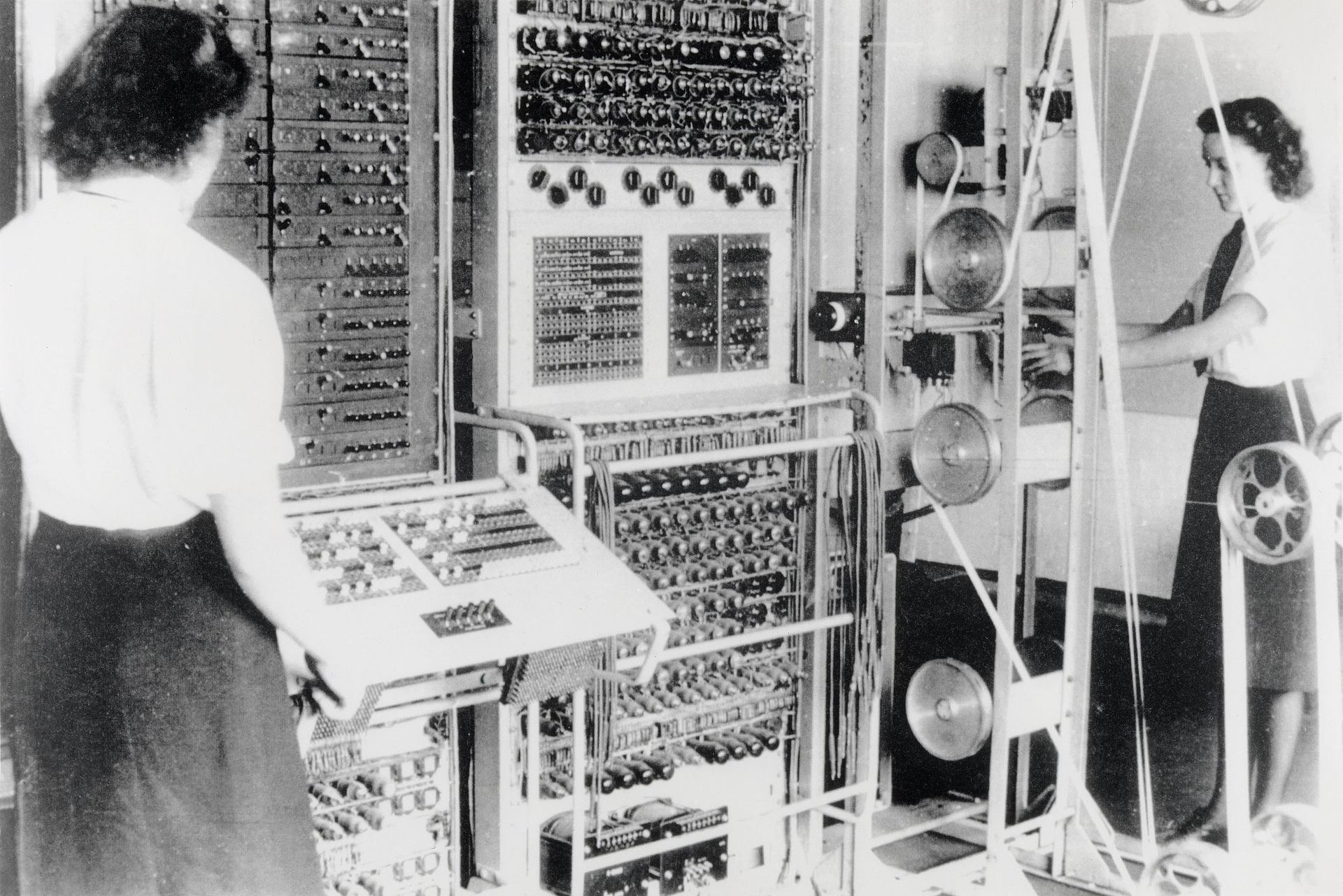

Use of the term cryptography goes back to the 17th century but the practice of using secret codes to scramble messages goes back thousands of years. The Caesar Cipher is one of the earliest known and was used by Julius Caesar to send secret messages to his generals. Early ciphers were very simple (Caesar shifted each letter of his message three letters to the right to produce the scrambled text) but over time they became more sophisticated and by the middle of the 20th century were being generated on complicated machines like the Enigma and Lorenz machines used by Germany in the Second World War.

An Enigma machine (left), a Lorenz SZ42 (centre) and the Colossus (right, in a picture thought to be from 1944). The Colossus was the world's first programmable electronic computer, built for Bletchley Park as part of their efforts to crack the Lorenz cipher during the war.

Cryptographers call these types of cipher symmetric because the same key is used to encrypt and decrypt the messages. A weakness of these methods is that they require the sender and recipient to find a way to communicate the secret key to each other. For instance the settings for the Enigma machines were written in a code book that had to be taken by courier to each of the radio communication teams in the field and, if these books were lost or stolen, all the messages would be exposed.
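To make the idea of a symmetric cipher concrete, here is a minimal sketch of the Caesar Cipher in Python. The function name and the sample message are my own inventions for illustration; the point to notice is that the same key (3) is needed both to scramble the message and to unscramble it.

```python
import string

ALPHABET = string.ascii_uppercase


def caesar(text: str, key: int) -> str:
    """Shift each letter `key` places along the alphabet, wrapping round at Z."""
    shifted = []
    for ch in text.upper():
        if ch in ALPHABET:
            shifted.append(ALPHABET[(ALPHABET.index(ch) + key) % 26])
        else:
            shifted.append(ch)  # leave spaces and punctuation alone
    return "".join(shifted)


KEY = 3  # the shared secret: sender and recipient must both know it

ciphertext = caesar("ATTACK AT DAWN", KEY)   # encrypt: shift right by KEY
plaintext = caesar(ciphertext, -KEY)         # decrypt: shift back by the same KEY

print(ciphertext)  # DWWDFN DW GDZQ
print(plaintext)   # ATTACK AT DAWN
```

Anyone who learns the key can read every message, which is exactly the code book problem described above.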

With the right precautions these risks can be managed between small numbers of participants, but how would it work on the internet where there are potentially billions of exchanges that need to be secured between parties with little or no opportunity to meet and swap secret codes in private?

Public Key Cryptography

The answer came from a small team of academics - Diffie, Hellman and Merkle - who suggested the possibility of public key cryptography in 1976.

It subsequently turned out that this invention had been discovered independently by GCHQ - the UK equivalent of the NSA in the US - several years earlier. Since the information was classified GCHQ did not share it until 1997, when they concluded that continued secrecy would have no further benefit and released the original papers written by James Ellis[2]:

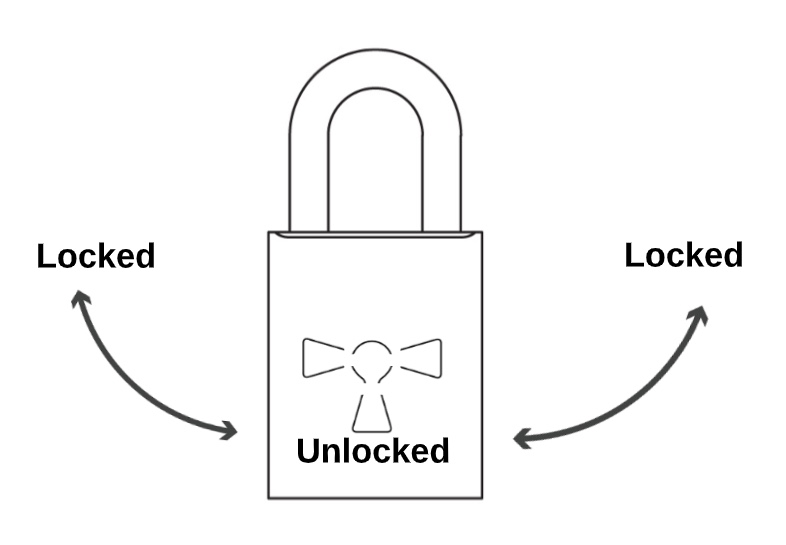

This important discovery plays a crucial part in the operation of the web today. The mathematical details are beyond me, so we will have to make do with an analogy to explain the ideas behind its operation. We are to imagine a special padlock with three key positions: it is locked when the key is turned clockwise to the left, or anti-clockwise to the right, and open when the key is in the central vertical position:

The lock can be operated by two types of key: the first key can only turn anti-clockwise and must be kept private. The second key can be copied and shared with anyone (we'll call this the public key) but can only turn clockwise.

Using public keys to ensure confidentiality

This type of lock has a couple of interesting applications. Suppose I need you to send me a message that must be kept secret. In this case I could send you an open lock and a copy of the public key and ask you to secure the message in a box by attaching the lock and turning the key to the leftmost position.

If the message is intercepted by someone who has a copy of the public key they can't open the lock because it is already in the leftmost position and these keys won't turn anti-clockwise. When I receive the message, however, I can use my private key to turn the key to the right and thus open it. We have therefore been able to ensure the confidentiality of a message shared over an unsecure network (in this case the postal network) without having to first share any secret information.
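For readers who would like to see the padlock in numbers, here is a rough sketch using the RSA scheme with tiny textbook primes. This is an assumption on my part about which algorithm to illustrate with (the analogy itself is not tied to one), the helper name `apply_key` is made up, and real keys use primes hundreds of digits long.

```python
from typing import Tuple

# Toy RSA key pair built from two tiny primes (illustration only).
p, q = 61, 53
n = p * q                    # 3233, part of both keys
phi = (p - 1) * (q - 1)      # 3120
e = 17                       # public exponent
d = pow(e, -1, phi)          # private exponent (needs Python 3.8+)

public_key = (e, n)          # can be copied and shared with anyone
private_key = (d, n)         # must be kept private


def apply_key(number: int, key: Tuple[int, int]) -> int:
    """'Turn' a key: raise the number to the key's exponent, modulo n."""
    exponent, modulus = key
    return pow(number, exponent, modulus)


message = 65                                # must be smaller than n
locked = apply_key(message, public_key)     # the sender locks the box
unlocked = apply_key(locked, private_key)   # only the private key opens it
wrong = apply_key(locked, public_key)       # turning the public key again does not

print(locked, unlocked, wrong != message)   # 2790 65 True
```

The eavesdropper holding only the public key is stuck with 2790, just as the interceptor in the analogy is stuck with a lock that won't turn back.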

Integrity

Suppose I need to send you a copy of a contract that we have agreed verbally and one of us is worried that it may be intercepted and tampered with before you receive it. In this case I could lock the message with my private key by turning it to the right. The message would no longer be secret - because anyone with a public key could open the lock and read the message - but, because only the private key holder can put the lock in this position, you can be confident that no one has altered the message if you can open it with the public key.

In the digital world it is often inefficient to encrypt entire documents just to ensure they are not tampered with, so another mathematical algorithm, called a hash[3], is used to create a short digest of the document and this digest is then encrypted with a private key. On receipt of the message you can apply the same hashing algorithm to the supplied document and decrypt the supplied digest with the public key: if the two results are the same you can be sure the message is intact.

When a private key is used to encrypt a message hash in this way it is referred to as a digital signature.
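The hash-then-sign idea can be sketched in a few lines. The key pair below is a toy built from two well-known Mersenne primes, far too small and predictable for real use, and the function names and contract text are my own; a real signature scheme also adds padding that is left out here.

```python
import hashlib

# Toy RSA key pair (illustration only; an assumption of this sketch).
p, q = 2**61 - 1, 2**89 - 1
n = p * q
e = 65537                             # public exponent
d = pow(e, -1, (p - 1) * (q - 1))     # private exponent (needs Python 3.8+)


def digest(document: bytes) -> int:
    """SHA-256 hash of the document, reduced modulo n to fit the toy key."""
    return int.from_bytes(hashlib.sha256(document).digest(), "big") % n


def sign(document: bytes) -> int:
    """Encrypt the digest with the private key: this is the signature."""
    return pow(digest(document), d, n)


def verify(document: bytes, signature: int) -> bool:
    """Decrypt the signature with the public key and compare the digests."""
    return pow(signature, e, n) == digest(document)


contract = b"I will sell you my bike for 50 pounds"
tampered = b"I will sell you my bike for 5 pounds"
signature = sign(contract)

print(verify(contract, signature))   # True
print(verify(tampered, signature))   # False
```

Note that the contract itself travels in the clear; only the short digest is encrypted.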

Authenticity

If you can unlock an encrypted message with a public key then you can be confident that the message was encrypted with the corresponding private key. But how do we know the public key is genuine? Because public keys are supplied over an unsecure network there is a risk that an attacker might intercept the genuine public key and replace it with a public key of their own.

Unfortunately, the possibility that we might be tricked into using the wrong public key is a serious problem: should an attacker succeed in doing this they could intercept any future correspondence, use their own private key to read it, and then pass it on to the intended recipient using the recipient's legitimate public key to encrypt it. In this case neither the recipient nor the sender would be aware that a "man in the middle" was snooping on their correspondence.

In the real world we would probably try and solve this problem by finding a mutual acquaintance who could be trusted to safely handle the initial delivery of the public key. As we shall see, a similar thing happens in the digital world thanks to the systems and standards that permit the creation and distribution of SSL certificates (the so called Public Key Infrastructure).

SSL/TLS

As mentioned above the protocol used to secure HTTP traffic is the Transport Layer Security protocol (often referred to using the old name for the protocol, SSL, or sometimes SSL/TLS).

When a client contacts a server using HTTPS a series of interactions defined by the TLS protocol, called a handshake, is initiated. The handshake has a number of steps as shown in this representation of TLS 1.2[4], but the end result is that the client and the server can safely exchange a shared secret that can be used to encrypt the rest of the communications[5].
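If you want to watch a handshake happen, Python's standard `ssl` module will run one for you. The sketch below is only one way of doing it, and the function names are my own: it sets up a client that insists on certificate and hostname checks, then reports what was negotiated.

```python
import socket
import ssl


def make_context() -> ssl.SSLContext:
    """Client settings: verify the server's certificate and its hostname."""
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocols
    return context


def describe_connection(hostname: str, port: int = 443) -> dict:
    """Run a TLS handshake with the server and report what was agreed."""
    context = make_context()
    with socket.create_connection((hostname, port), timeout=10) as sock:
        # wrap_socket performs the handshake before it returns
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            cipher_name, _protocol, secret_bits = tls.cipher()
            return {
                "protocol": tls.version(),
                "cipher": cipher_name,
                "secret_bits": secret_bits,
            }
```

On a machine with network access, calling `describe_connection("smallworkshop.co.uk")` should return the protocol version and the symmetric cipher that will protect the rest of the session.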

SSL certificates

As part of the handshake process the server passes the client details of the SSL certificate for the website.

The certificate is a small file installed on the server that contains:

- the domain name of the website

- the date the certificate was issued and the date it will expire

- the site's public key
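The same fields can be read programmatically. Here is a sketch using Python's standard `ssl` module; the helper names and the sample data are invented for illustration. One caveat: the dictionary Python returns covers the names, dates and issuer but does not expose the public key itself, so that field is missing below.

```python
import socket
import ssl


def fetch_certificate(hostname: str, port: int = 443) -> dict:
    """Return the server's certificate as the dict produced by getpeercert()."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()


def summarise(cert: dict) -> dict:
    """Pick out the headline fields from a getpeercert()-style dict."""
    issuer = {key: value for rdn in cert.get("issuer", ()) for key, value in rdn}
    return {
        "domains": [v for kind, v in cert.get("subjectAltName", ()) if kind == "DNS"],
        "issued": cert.get("notBefore"),
        "expires": cert.get("notAfter"),
        "issuer": issuer.get("organizationName"),
    }


# Made-up certificate data in the same shape, so the sketch runs offline.
sample = {
    "subject": ((("commonName", "example.test"),),),
    "issuer": ((("countryName", "XX"),), (("organizationName", "Hypothetical CA"),)),
    "notBefore": "Jan  1 00:00:00 2024 GMT",
    "notAfter": "Mar 31 00:00:00 2024 GMT",
    "subjectAltName": (("DNS", "example.test"), ("DNS", "www.example.test")),
}

print(summarise(sample))
```

With network access, `summarise(fetch_certificate("smallworkshop.co.uk"))` would show the real values for this site.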

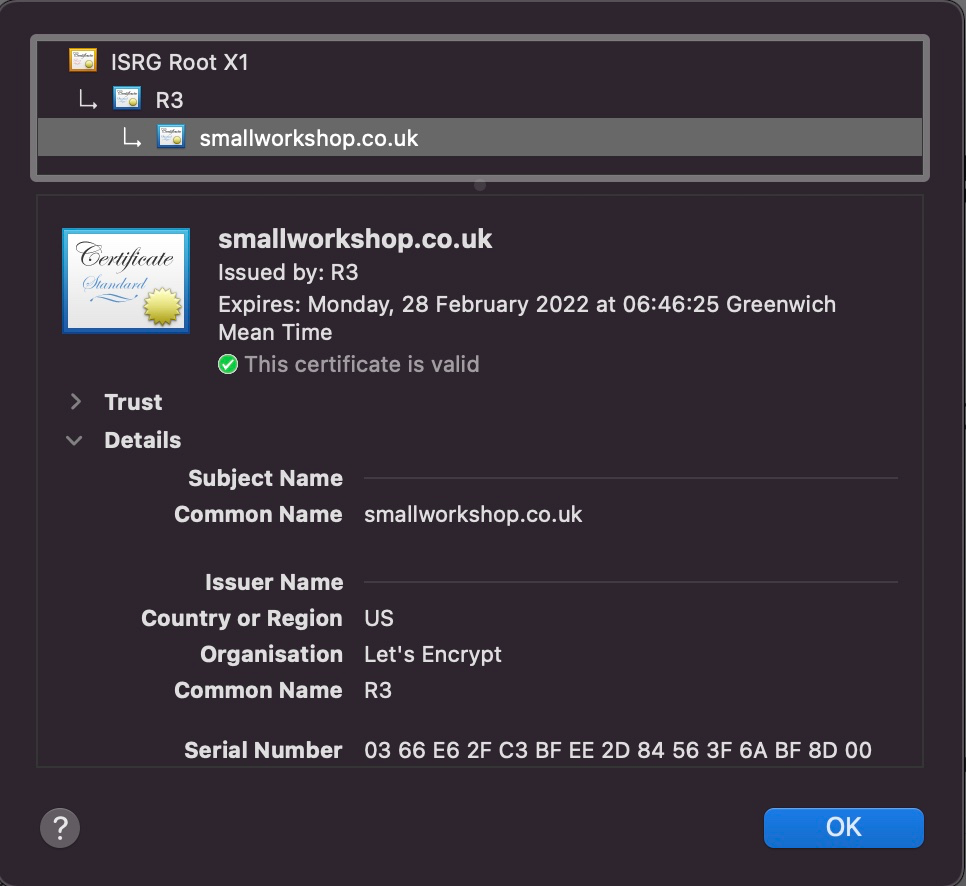

You can view details of a website's certificate by clicking on the padlock sign shown next to the domain name in your browser. Here are details of the certificate installed on this site:

Certificate verification

Although certificates are encoded in a binary format they are basically just text files and can be made (and faked) by anyone, so an additional step is needed to confirm that the certificate is genuine.

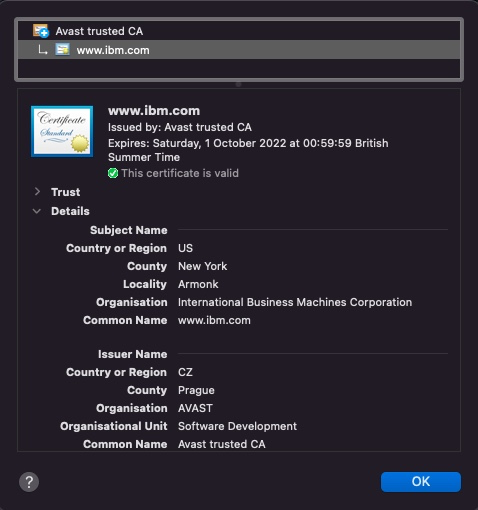

Imagine that someone sharing the same network as you has made a fake certificate claiming to be the owner of the website you were planning to visit and that they had control over the routing of your web traffic, perhaps by operating a dodgy wifi access point. In this case they could use the fake certificate to establish a secure communication channel with your client, allowing them to decrypt your messages, including any confidential information you might supply, before establishing a secure connection to the genuine site and passing on your messages. Like the "man-in-the-middle" in the lock analogy, the attacker is now able to use the two "secure" encrypted channels to read all of your messages.

To reduce the chance of this type of attack the genuine server owner is required to get their certificate digitally signed by someone trusted. The organisations that are allowed to sign certificates are called Certificate Authorities (CAs). Having first provided evidence that they own the domain name, the site owner generates a key pair and gets the CA to sign a certificate containing the public key. The signature is then included as part of the issued certificate.

During the SSL handshake the client will attempt to verify the signature using the CA's public key (a list of trusted CAs is preinstalled with your operating system or browser for this purpose).

Having established that the certificate was signed by a trusted CA, the browser will then compare the hostname contained in the URL being requested, e.g. smallworkshop.co.uk, with the server's identity as presented in the certificate. The browser will also check other details of the certificate to ensure it is valid, for instance it will confirm that it has not expired or been revoked.
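Two of these checks, the hostname comparison and the expiry date, are simple enough to sketch. The code below is a simplified illustration and not what any browser actually runs: the function names and sample certificate are made up, the wildcard rule is a cut-down version of the real one, and the signature and revocation checks are left out entirely.

```python
import ssl
from datetime import datetime, timezone


def hostname_matches(pattern: str, hostname: str) -> bool:
    """Exact match, or a '*.' wildcard standing in for one left-most label."""
    pattern, hostname = pattern.lower(), hostname.lower()
    if pattern.startswith("*."):
        _, _, parent = hostname.partition(".")
        return parent != "" and parent == pattern[2:]
    return pattern == hostname


def certificate_problems(cert: dict, hostname: str, now: float) -> list:
    """List the reasons (if any) these two checks would reject `cert`."""
    problems = []
    names = [v for kind, v in cert.get("subjectAltName", ()) if kind == "DNS"]
    if not any(hostname_matches(name, hostname) for name in names):
        problems.append("hostname mismatch")
    if now < ssl.cert_time_to_seconds(cert["notBefore"]):
        problems.append("not yet valid")
    if now > ssl.cert_time_to_seconds(cert["notAfter"]):
        problems.append("expired")
    return problems


# Made-up certificate data in the shape returned by ssl's getpeercert().
sample = {
    "notBefore": "Jan  1 00:00:00 2024 GMT",
    "notAfter": "Mar 31 00:00:00 2024 GMT",
    "subjectAltName": (("DNS", "example.test"), ("DNS", "*.example.test")),
}
february = datetime(2024, 2, 1, tzinfo=timezone.utc).timestamp()
may = datetime(2024, 5, 1, tzinfo=timezone.utc).timestamp()

print(certificate_problems(sample, "www.example.test", february))  # []
print(certificate_problems(sample, "evil.test", february))         # ['hostname mismatch']
print(certificate_problems(sample, "example.test", may))           # ['expired']
```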

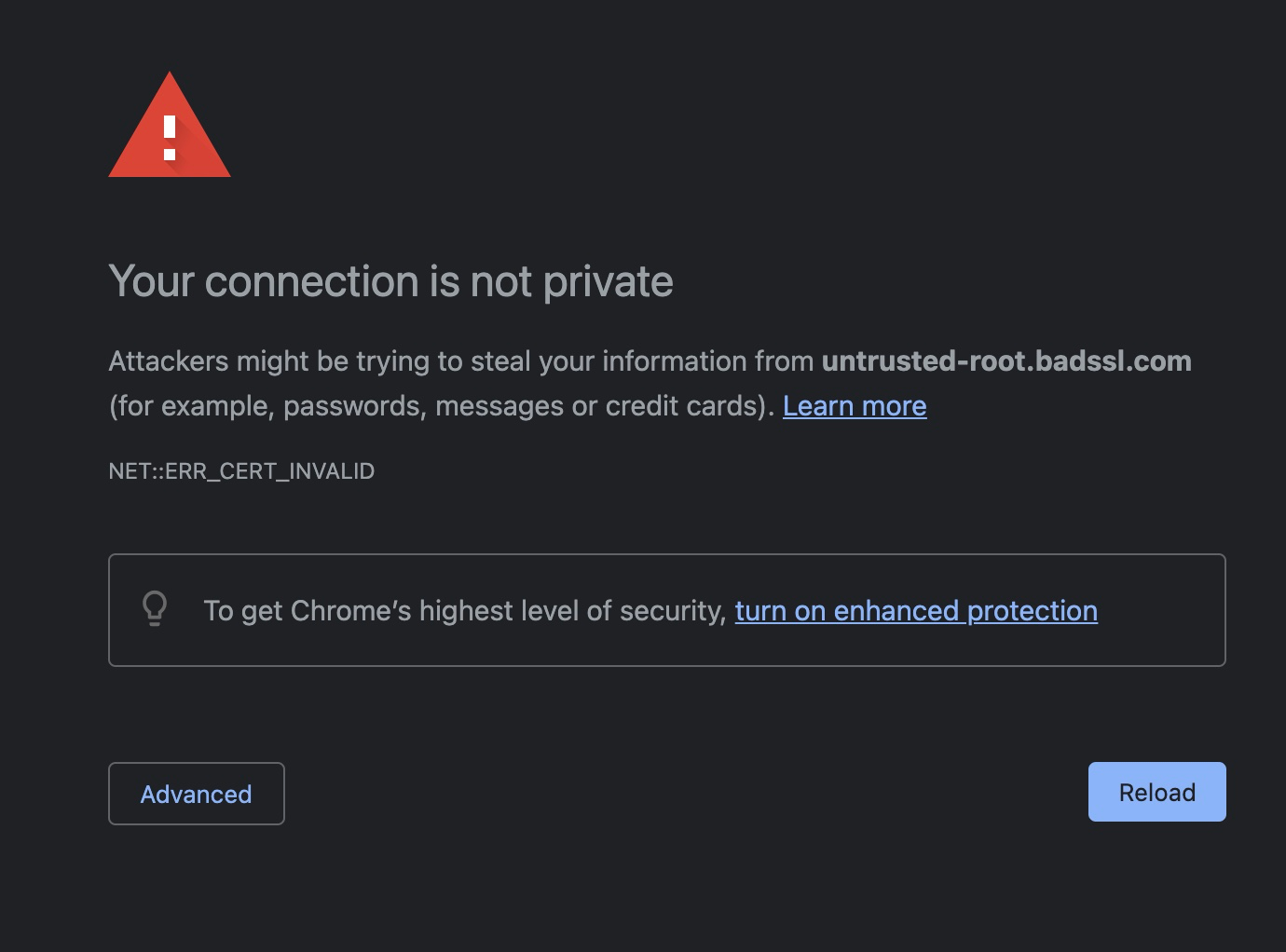

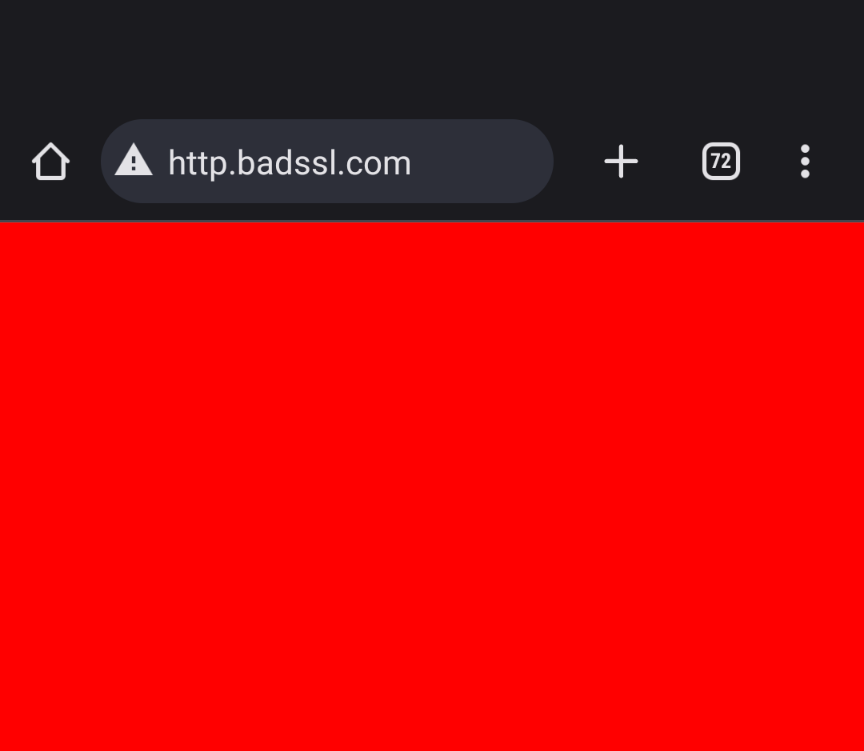

If any of these checks fail the browser will either terminate the connection or present the user with a warning:

Certificate validation

As mentioned earlier, although certificates allow us to establish a private communication with a server, and offer some reassurance that we are exchanging messages with the actual web site owner, not an impersonator, this does not mean we can assume the web site is trustworthy.

Many of us would like assurance that the digital entity we are communicating with is the same real-world organization we've interacted with in the past. How do we know that mybank.com is the same organisation that runs the high-street bank branch we opened an account with and not someone pretending to be them in order to trick us into handing over confidential information?

To try and solve this problem three different "levels" of certificate were created. The idea was that where lower levels of trust were required - for instance a blog site that does not collect end user data - then these sites could use a certificate that was issued with a minimum set of checks, but more sensitive sites, like commercial web sites, would submit to a more stringent set of checks. Although this approach sounds logical it has failed in practice, and it is instructive to look at why. The three levels of validation are:

DV (Domain Validation)

This is the minimum level of checks required to get a certificate. A DV certificate is granted when the applicant has proven they have control over the domain name.

The most common way for a CA to establish control of a domain name is to provide a token for installation on the web server at a pre-agreed location (e.g. http://smallworkshop.co.uk/.well-known/acme-challenge/<TOKEN>). Assuming the CA can then retrieve the file it will issue the certificate.
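Seen from the CA's side, the check amounts to "fetch the file and see if it contains what I handed out". The sketch below is my own simplification with hypothetical names throughout. In the real ACME protocol the file body combines the token with a fingerprint of the applicant's account key; here it is treated as an opaque string, and a fake in-memory "web" stands in for the network.

```python
from typing import Callable


def challenge_url(domain: str, token: str) -> str:
    """Where the CA expects to find the token file."""
    return f"http://{domain}/.well-known/acme-challenge/{token}"


def domain_control_proven(domain: str, token: str, expected_body: str,
                          fetch: Callable[[str], str]) -> bool:
    """Fetch the challenge file and compare it with what the CA handed out."""
    try:
        body = fetch(challenge_url(domain, token))
    except OSError:
        return False  # file unreachable: no evidence of control
    return body.strip() == expected_body


# A pretend web with one site that has installed its token (all values made up).
fake_web = {challenge_url("example.test", "tok123"): "tok123.fingerprint\n"}


def fake_fetch(url: str) -> str:
    if url not in fake_web:
        raise FileNotFoundError(url)  # a subclass of OSError
    return fake_web[url]


print(domain_control_proven("example.test", "tok123", "tok123.fingerprint", fake_fetch))  # True
print(domain_control_proven("other.test", "tok123", "tok123.fingerprint", fake_fetch))    # False
```

Notice that nothing here says anything about who the applicant is, only that they can put a file on the server. That is all a DV certificate proves.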

These certificates are available free of charge (see Let's Encrypt below).

EV (Extended Validation)

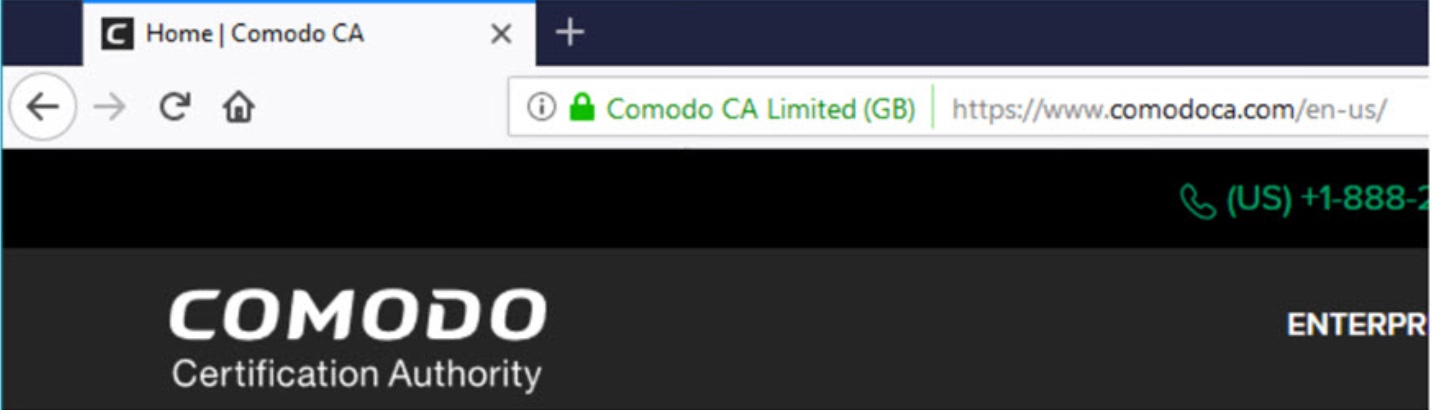

EV is the most expensive type of certificate, often costing hundreds of pounds per year. An EV certificate includes details of the business that requested it:

In addition to validating control of the domain name, EV certificates require the applicant to provide evidence to show that they are the actual business owner (for instance copies of bank account statements, company registration documents etc) and proof that the person requesting the certificate represents the company concerned.

OV (Organizational Validation)

Most CAs offer an intermediate level of validation, the OV certificate. These certificates still require some paperwork to be supplied but are less burdensome than the EV process and therefore cheaper. As with EV certificates the legal entity owning the domain can be seen on the certificate.

Why Extended Validation failed

The purpose of EV certificates is to give end users confidence that the website they are visiting is safe because it has been independently confirmed as being owned by a real-world business they are already familiar with. For this to be effective the users need both to understand that this is what the certificate shows and also to adapt their behaviour as a result (for instance, by being wary of websites without an EV certificate).

Browser developers experimented with various visual cues to try and alert users that they were looking at a site with an EV certificate, and you may recall the days not so long ago when your browser address bar for these sites included a green padlock and the verified legal identity of the company that owned it:

Unfortunately, research consistently showed that most users didn't understand what these security indicators meant and also failed to adjust their behaviour when using sites that did not show them.

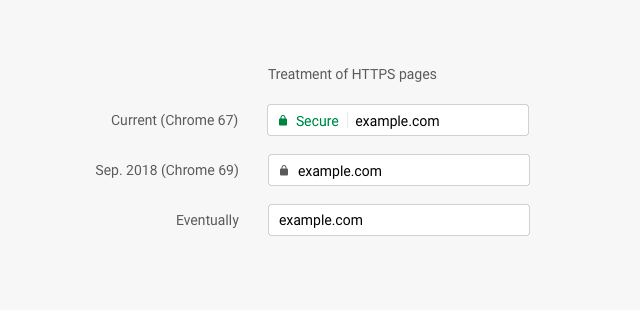

In 2016 Google concluded they were fighting a losing battle and abandoned the idea altogether, announcing a plan to gradually phase out the EV indicators in their Chrome browser.

As of 2022, all major web browsers (Google Chrome, Mozilla Firefox, Microsoft Edge and Apple Safari) have done the same and it is no longer possible to easily distinguish DV from EV or OV certificates.

Google's proposal argued that users should expect that the web is safe by default and that they will only need to be warned if there is an issue. When this stage was reached it should be possible to remove the security identifiers altogether:

Until HTTPS was ubiquitous, a security indicator (currently a grey padlock in most browsers) would be retained to show which sites were offering secure browsing and sites using HTTP would be shown as "not secure":

This created a new problem in so far as owners of sketchy websites could make them look a bit more convincing by getting an easily obtained DV certificate and using the security indicator as (misleading) endorsement of the safety of their site.

The solution, slightly counterintuitively, involved making it even easier to get a certificate. The idea was to reduce the barriers to adopting HTTPS to such a degree that the point at which the web became 'safe by default' would be brought forward and security indicators could be dropped altogether.

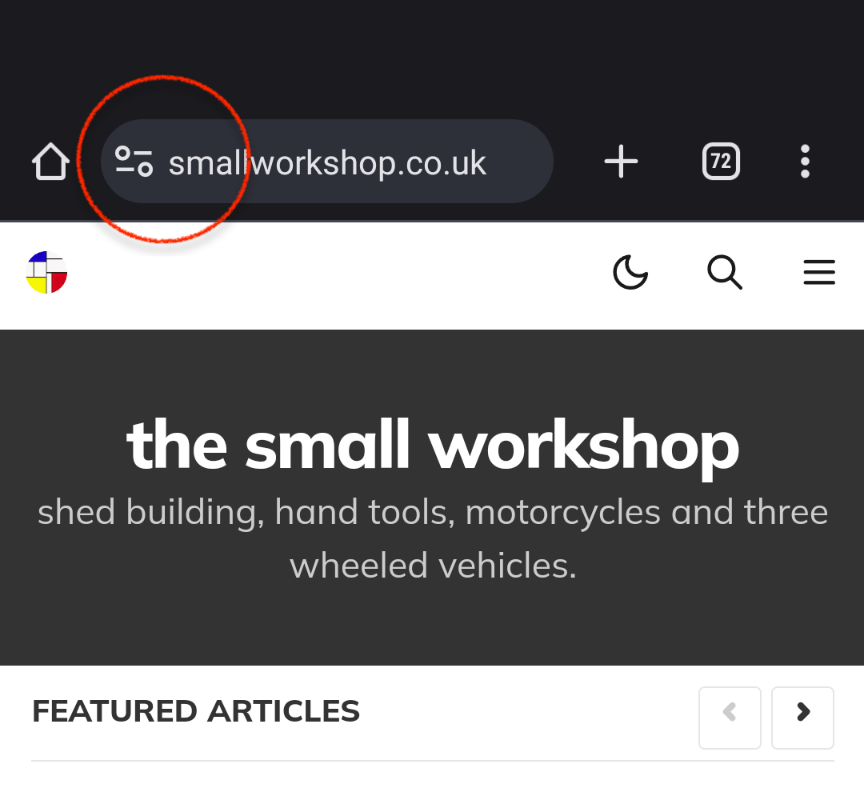

Edit September 2023: Google have now dropped the security indicator from the mobile version of Chrome.

The replacement for the old padlock symbol can be clicked to see certificate status. A triangle with an exclamation mark indicates an HTTP site.

HTTPS Everywhere & Let's Encrypt

In 2014, in an attempt to drive up HTTPS adoption, Google announced plans to include it as a weighting factor in their search algorithm. As can be seen in the video below, at this time it was still a royal pain in the arse to enable HTTPS on a server and this was a major obstacle to adoption, particularly amongst operators of small websites.

If you want ubiquitous HTTPS you need to make it easy and free, and a not-for-profit foundation run by the Electronic Frontier Foundation, Mozilla and others set out to do just that.

Let's Encrypt was launched to the public in April 2016 and provides a free and automated service that delivers SSL/TLS certificates to websites.

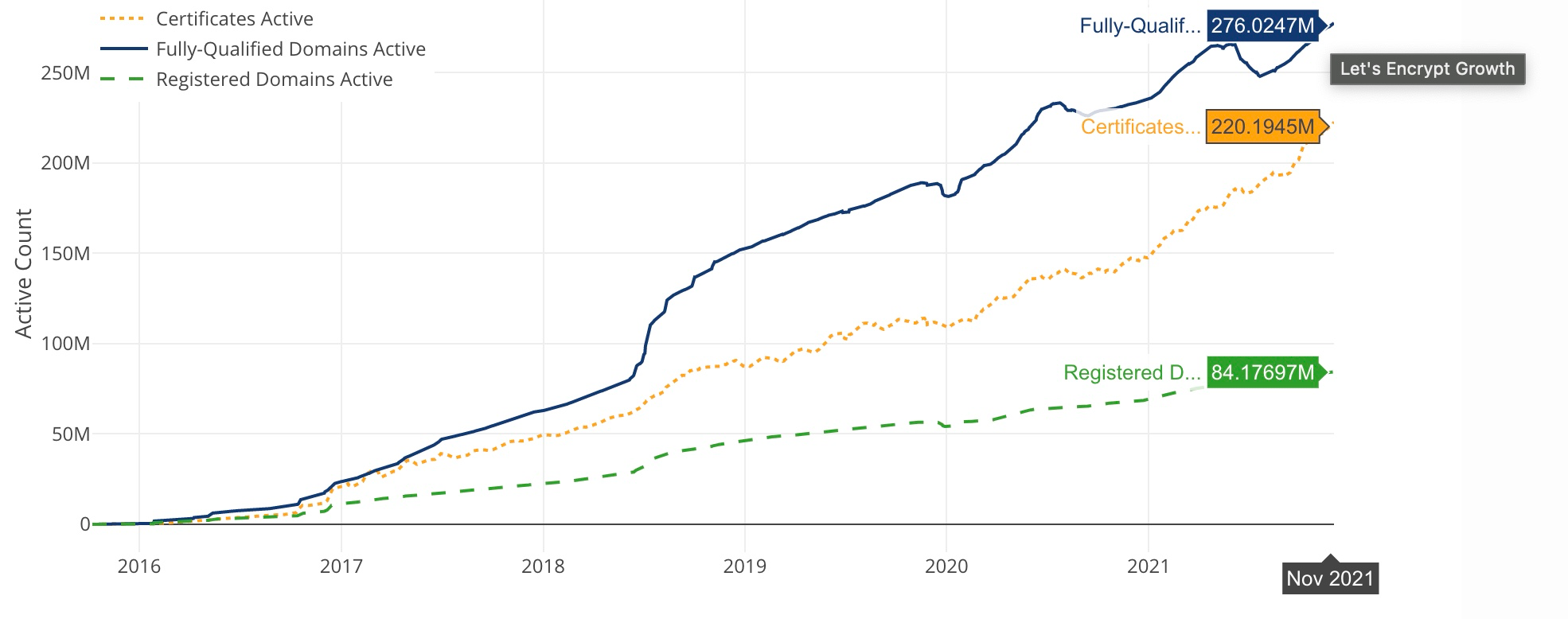

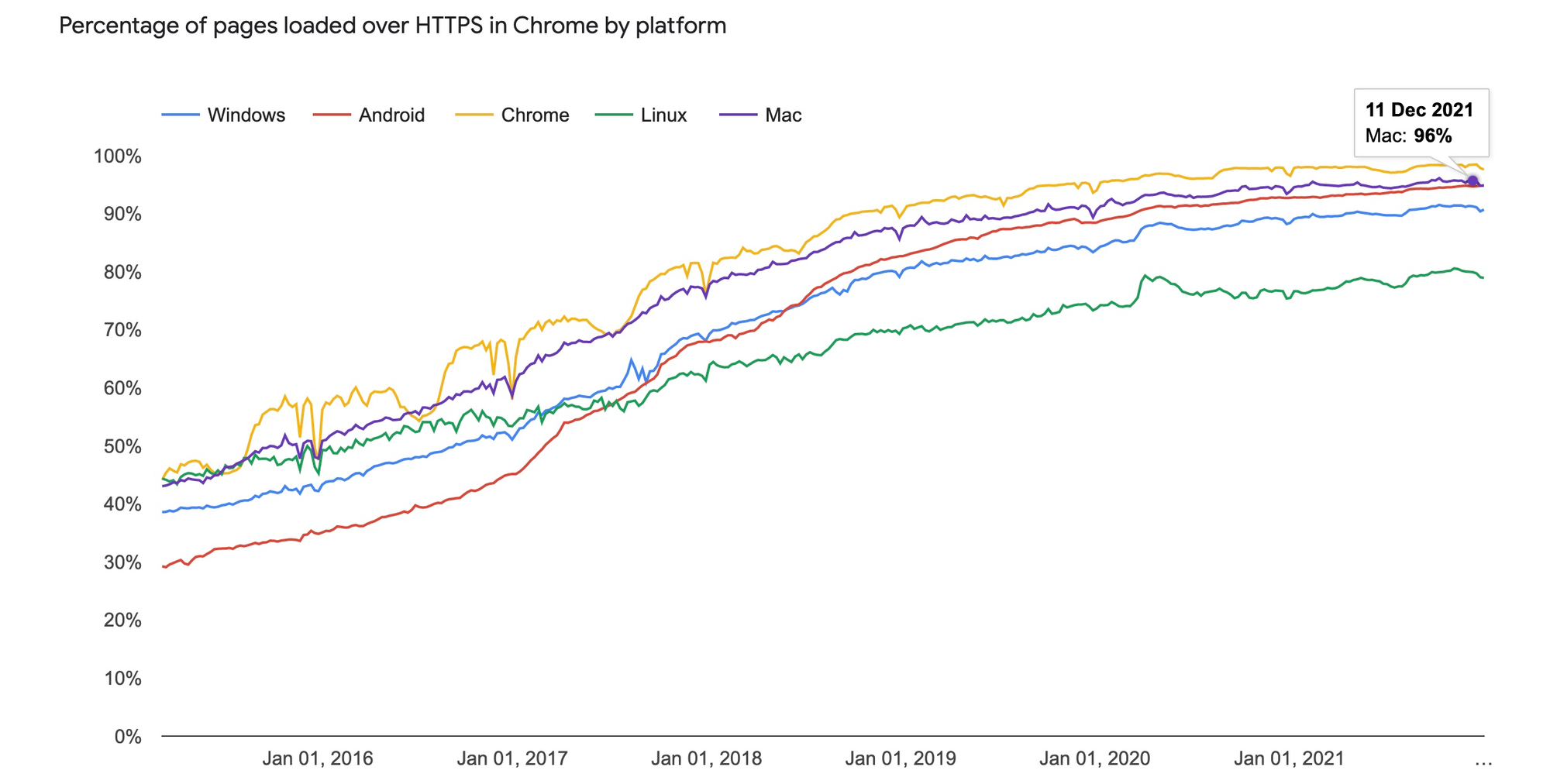

The launch of Let's Encrypt, combined with the efforts of the browser developers to draw attention to 'not secure' HTTP sites and Google's inclusion of HTTPS as a search ranking factor, has helped to dramatically improve adoption:

Let's Encrypt stats: there are ~2bn websites and Let's Encrypt are used on around 1 in 8 of them / Google Transparency Report on the right

Since Let's Encrypt entered the market several companies, including Amazon, now offer very convenient ways of getting certificates for free.

Controversy

The strategy to drive HTTPS adoption by making it very easy to get free DV certificates and then doing away with the visual indicator in the browser that distinguished them from expensive EV certificates supplied by Certificate Authorities unsurprisingly annoyed the CAs. Now that Let's Encrypt are churning out hundreds of millions of certs for free, it is hard to deny that they have reduced the potential size of the commercial CA market.

Complaints also came from people concerned that the strategy of HTTPS everywhere might make part of the web's history inaccessible, for instance where small sites run by individuals without the know-how or inclination to update to HTTPS are left behind. A notable proponent of this argument is Dave Winer, one of the fathers of blogging. Part of his argument stems from unease at the prominent role that Google has played in dictating the change. It is plausible that some abandoned content will never be upgraded to HTTPS, and also that at some point in the future browsers may refuse to load this content over an unsecure connection so that it will effectively be lost forever. The extent and likelihood of this risk is a matter of debate but one thing that is indisputable is that Google's support for HTTPS adoption has been very influential.

Tin foil hat section

How secure is HTTPS?

The Public Key Infrastructure is only as reliable as the internal processes used by the Certificate Authorities: the CAs need to protect their private keys and to take adequate steps to ensure only genuine certs are issued. Although they are subject to external audits and face commercial and reputational damage if they behave badly, failures on the CA side, although rare, are not unheard of.

Another potential vulnerability is that an attacker might change the list of trusted CAs installed in your operating system and use this as a way to trick you into using their own fake certificates. Under these circumstances, however, the attacker will need access to an account on your machine with sufficient permissions to change this data, in which case having a compromised list of trusted CAs is likely to be just one of the serious security problems you will be faced with.

The other ways for attackers to get around HTTPS boil down to:

- Trying to break the mathematics

- Finding (or inserting) mistakes in the way the protocols are implemented.

- Stealing private keys

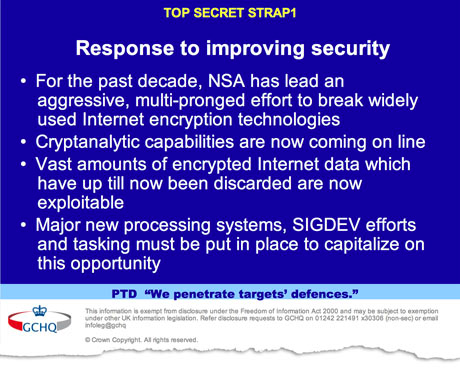

Documents leaked by former NSA contractor Edward Snowden revealed that at the time (2013) the NSA were decrypting huge amounts of SSL protected traffic.

Patriotic Brits will be pleased to know that GCHQ was in on the action too!

No one knows for sure how they were doing it, but the leaked documents revealed that the NSA were spending a lot of money to influence authors of commercial cryptographic solutions to make them exploitable via "back doors" in their software and hardware. Many crypto experts also believe the NSA were trying to sabotage standards setting processes to introduce weaknesses into cryptographic standards that would be hard to detect but easy for those in the know to exploit. The more mundane, but perhaps more likely, explanation for their achievements is that they simply found ways to steal the secret keys for targets they were interested in, perhaps by finding (or planting) biddable employees with access to the secrets.

It is also likely that they had enough money to crack some of the encryption algorithms deployed at the time through brute force. Eran Tromer in a 2007 dissertation concluded it was "feasible to build dedicated hardware devices that can break 1024-bit RSA keys at a cost of under $1 million per device." and that a device of this type could crack a 1,024-bit key in one year (the NSA annual budget is allegedly around $10bn).

Improvements introduced into TLS 1.3 mean that a different key is used for each HTTPS session making this type of brute force attack ruinously expensive to do at scale and - unless someone finds some way of breaking the mathematics involved so that the number of computations needed to crack the keys is significantly reduced - most experts agree this is not a serious threat at the moment.
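The "different key for each session" idea rests on a key exchange in which both sides pick fresh random numbers for every handshake. Below is a rough sketch using classic Diffie-Hellman arithmetic. TLS 1.3 uses more modern variants of the same idea, and the parameters and function name here are toy-sized assumptions chosen for readability.

```python
import secrets

# Public parameters, known to everyone (toy-sized; real groups are far larger).
P = 2**127 - 1   # a prime modulus
G = 3            # the agreed base


def new_session_secrets() -> tuple:
    """One handshake: each side picks a fresh private number, swaps the
    public value derived from it, and computes the shared secret."""
    client_private = secrets.randbelow(P - 3) + 2   # thrown away after the session
    server_private = secrets.randbelow(P - 3) + 2   # thrown away after the session

    client_public = pow(G, client_private, P)       # sent over the network
    server_public = pow(G, server_private, P)       # sent over the network

    client_secret = pow(server_public, client_private, P)
    server_secret = pow(client_public, server_private, P)
    return client_secret, server_secret


client_view, server_view = new_session_secrets()
print(client_view == server_view)   # True: both ends hold the same secret
```

Because the private numbers are discarded after each session, stealing the server's long-term private key later does not help an attacker decrypt traffic they recorded earlier.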

Quantum threat! No, I don't understand quantum physics either. David Deutsch does though and about 35 years ago he proposed the idea of a "quantum computer".

This type of computer is a worry for cryptographers since, if it can be made to work properly, it promises to allow a huge number of calculations to be carried out simultaneously, significantly reducing computing times compared to conventional computers. For instance it would become much easier to find the factors of a large number (this is the sum you need to do when cracking current ciphers). And by 'significantly' the promise is to reduce calculations that might take 1000s of years on today's computers into seconds when done using a quantum technology, making it possible to break all public key cryptography currently in use.
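To see why finding factors matters, here is a sketch of an attack on a toy RSA public key. The numbers are tiny textbook values of my own choosing. Once the attacker has split the public number n into its two prime factors, the private key follows from a single line of arithmetic.

```python
def smallest_factor(n: int) -> int:
    """Trial division: fine for toy numbers, hopeless at real key sizes."""
    candidate = 2
    while candidate * candidate <= n:
        if n % candidate == 0:
            return candidate
        candidate += 1
    return n


# A public key as an attacker would see it (toy-sized, illustration only).
e, n = 17, 3233

p = smallest_factor(n)                # the step that is infeasible for real keys
q = n // p
d = pow(e, -1, (p - 1) * (q - 1))     # the private exponent, recovered

ciphertext = pow(65, e, n)            # something encrypted with the public key
recovered = pow(ciphertext, d, n)     # the attacker reads it anyway

print(p, q, d, recovered)             # 53 61 2753 65
```

On a conventional computer the factoring step is what takes the thousands of years; everything after it is instant.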

IBM, Microsoft and Google are researching the technology and excitingly some of the current implementations look like mad chandeliers. Here's IBM's:

There is still a debate amongst scientists about whether a large-scale quantum computer is physically possible, but who knows what the boffins will come up with next? NIST are so worried they are running a bake-off to see if someone can come up with a "quantum proof" cryptographic cipher.

Get your own certificate

Notwithstanding the above, tampering and surveillance attacks are not just theoretical risks and are commonplace on the web. But for a standard blog where no personal data is stored you might well ask why you need to bother, particularly when, for now at least, browsers will continue to load HTTP content in any case.

So why bother? The answer is that free services like Let's Encrypt have made it so simple there is really no reason not to.

There is a good overview of how Let's Encrypt works here:

The recommended client for managing your Let's Encrypt certificate is Certbot. The standard installation will install the client on your web server and set up a scheduled job to renew your certificates on a regular basis. The instructions are here and the whole process takes a few minutes:

This site is created using Ghost which runs on an Nginx web server. The certbot client can be used to update your nginx configuration files automatically with details of the location of the Let's Encrypt keys and this should work for standard configurations. If you want to configure Nginx for HTTPS yourself, you can read about it here:

References

| 1 | SSL was renamed TLS for reasons now lost in the mists of time. The protocol is still frequently referred to as SSL or sometimes as SSL/TLS. |

| 2 | Ellis first proposed his scheme in a (then) secret GCHQ internal report, The Possibility of Secure Non-Secret Digital Encryption, in 1970. His theory was subsequently implemented by one of his colleagues, Clifford Cocks. The implementation is essentially the RSA Algorithm. |

| 3 | Cryptographic hash algorithms map data of arbitrary size to fixed-size values. The algorithms are one-way: it is easy to calculate the hash, but difficult or impossible to regenerate the original input if only the hash value is known. A real-world parallel is a fingerprint, which can be used to identify a person but on its own can't be used to work out the person's other physical attributes. |

| 4 | TLS 1.3 adds some additional steps to the protocol that improve performance by reducing the number of round trips needed to negotiate the session, and this makes the process a bit harder to follow than 1.2. TLS 1.3 also offers a degree of extra protection - known as perfect forward secrecy - should the private key on the server ever be stolen (a new shared secret is generated for each session and this makes life more difficult for attackers who are recording encrypted data in the hope they can get hold of the private key at some point in the future). Here is a good explanation of the difference between 1.2 and 1.3. |

| 5 | It is more efficient to use symmetric encryption after the initial handshake has been done using asymmetric encryption. |